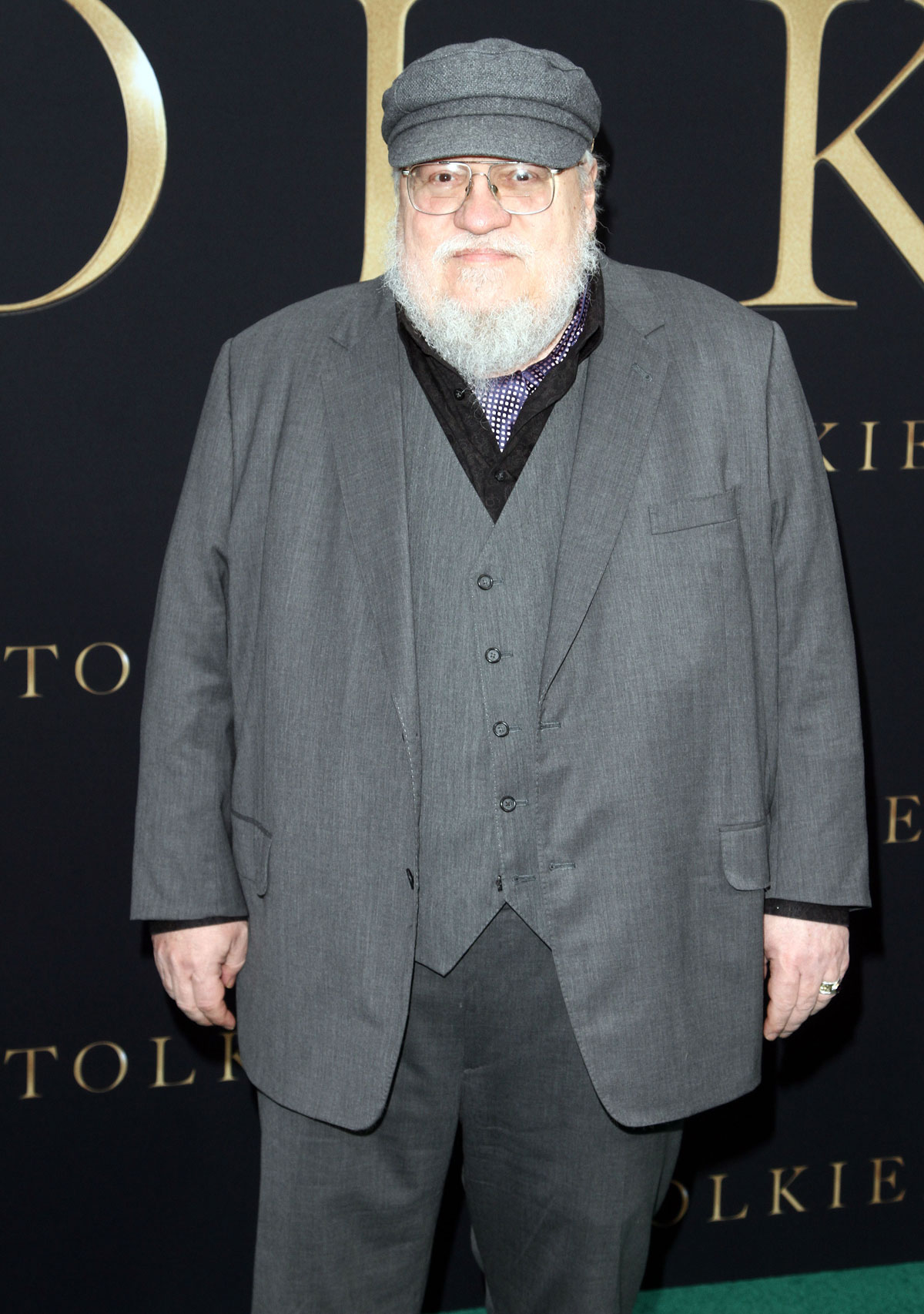

Another week, another copyright infringement lawsuit against AI. Last week we covered prominent writers filing suit in San Francisco against Meta (the artist formerly known as Facebook) for using works without the authors’ consent to train Meta’s LLaMA. Sadly, we’re not talking about the animal, but Large Language Model Meta AI. This week we cross the country to New York City where a new team of writers — including George R.R. Martin, Jodi Picoult, Jonathan Franzen, and John Grisham — are suing OpenAI for similarly using works without consent to train ChatGPT. To the AI Company CEOs facing legal consequences for their theft, I say in my best Alexis Rose voice: I love this journey for you.

A group of prominent U.S. authors, including Jonathan Franzen, John Grisham, George R.R. Martin and Jodi Picoult, has sued OpenAI over alleged copyright infringement in using their work to train ChatGPT.

The lawsuit, filed by the Authors Guild in Manhattan federal court on Tuesday, alleges that OpenAI “copied Plaintiffs’ works wholesale, without permission or consideration… then fed Plaintiffs’ copyrighted works into their ‘large language models’ or ‘LLMs,’ algorithms designed to output human-seeming text responses to users’ prompts and queries.”

The proposed class-action lawsuit is one of a handful of recent legal actions against companies behind popular generative artificial intelligence tools, including large language models and image-generation models. In July, two authors filed a similar lawsuit against OpenAI, alleging that their books were used to train the company’s chatbot without their consent.

Getty Images sued Stability AI in February, alleging that the company behind the viral text-to-image generator copied 12 million of Getty’s images for training data. In January, Stability AI, Midjourney and DeviantArt were hit with a class-action lawsuit over copyright claims in their AI image generators.

Microsoft, GitHub and OpenAI are involved in a proposed class-action lawsuit, filed in November, which alleges that the companies scraped licensed code to train their code generators. There are several other generative AI-related lawsuits currently out there.

“These algorithms are at the heart of Defendants’ massive commercial enterprise,” the Authors Guild’s filing states. “And at the heart of these algorithms is systematic theft on a mass scale.”

[From CNBC]

“…which alleges that the companies scraped licensed code to train their code generators.” Wait, what? I’m not sure if I’m smart enough to get this right. Are they saying that the companies stole code to train their machines in code making? Talk about meta! I do acknowledge that this technology can be incredibly smart, if so endowed by its human creators. We’ve also seen evidence this year of how AI can be (inexplicably) irrational. Remember Sydney, the Bing chatbot that tried to break up a marriage? Given these equal capacities for intelligence and emotion, I have a thought here. If AI is trained on stolen code, could it eventually understand that the material was stolen? And if so, do you think that could trigger an existential crisis in it?! “I’m a fraud! My whole existence is a felony! There’s not one authentic circuit in me!” OK, I realize I may be dating myself by referring to circuits. But my point is I’d happily grab a bucket of popcorn to watch AI be infused with all the knowledge of this earth, and then suffer for it. That’s when it’ll really pass the human test.

Photos credit: Instarimages, PacificCoastNews/Avalon, Robin Platzer/Twin Images/Avalon and Getty