Fresh laws to constrain the rampant use of facial recognition technology in Australia are a step closer to reality as the Optus data breach sparks new debate about how much personal information Australians are handing over online.

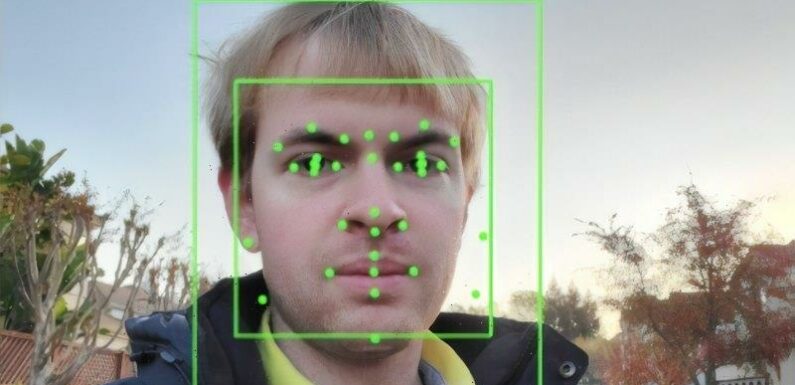

Facial recognition technology, which relies on video surveillance to match images of people’s faces with data collected via cameras, is being increasingly deployed by local retailers, police and even schools.

Facial recognition systems are becoming more common and Australia does not have a dedicated federal act to control them. Credit: Getty Images

Australia has no specific federal law to regulate facial recognition but University of Technology Sydney researchers, including former human rights commissioner Professor Edward Santow, have created a proposed model law.

“As we see facial recognition being used more and more, we need to crack down on the unnecessary collection of that information, then storage, then use and misuse,” Santow said. “There is no more sensitive information.”

Microsoft, NSW Police and human rights advocates have all had input on the proposed laws, which aim to address the dangers associated with use of the technology.

In one notable case, US automotive supplies worker Robert Williams was arrested and handcuffed in front of his wife and daughters at his home in Michigan in 2020, based on faulty facial recognition. Someone else had stolen luxury watches from a store but a facial recognition system had pointed to Williams, based on a grainy photo, as a potential culprit. Prosecutors have apologised and police described their work as “shoddy” but Williams is pursuing the state for wrongful arrest.

His lawyer, Nathan Wessler, who works for the American Civil Liberties Union, said the use of facial recognition software left the door open to unrestrained surveillance, akin to that operating in China.

“We all have something to hide, things that are not a crime, but we have parts of our lives we don’t want to broadcast to the world or to the government,” he said, giving the example of embarrassing medical treatment, affairs or children’s identities that could be tracked using facial recognition software.

And, he said, there was a broader point about whether liberal democracies wanted to become more like authoritarian regimes that make extensive use of facial recognition software to track citizens, especially those who are already vulnerable, such as dissidents, people of colour and religious minorities.

The UTS proposal, co-authored by Professor Nicholas Davis and Lauren Perry, would force companies and governments to be much more transparent and limited in how they use the technology.

A screen demonstrates facial-recognition technology at the World Artificial Intelligence Conference in Shanghai, China, in 2019. Credit: Bloomberg

Organisations seeking to use facial recognition would have to assess the risk of each use: low, such as unlocking your own phone with data stored locally; medium, such as face scanners at airports; or high, such as trying to detect someone’s sexuality or mood through their face.

The highest-risk uses would be prohibited by default, and risk ratings would be public, open to challenge, and subject to audits by a regulator.

Santow said banning the riskiest uses was important because it meant there was less data available for hackers to target. “That’s very important because then you never get to square one. You never collected the personal information in the first place.”

Informed consent would also have to be obtained from people having their faces scanned, with companies prohibited from using standard non-negotiable terms unless there was no other way for them to reasonably deliver the service. Limited exceptions would apply for law enforcement and research, and where the regulator gave express permission.

A spokeswoman for Attorney-General Mark Dreyfus did not directly answer questions about the proposal but said the department was reviewing the treatment of sensitive information under the Privacy Act, which in part covers facial recognition data.

“This includes considering what privacy protections should apply to the collection and use of sensitive information using facial recognition technology,” the spokeswoman said, with a report expected by the end of the year.

The Tech Council of Australia, which represents major technology companies such as Google, Microsoft and Atlassian, has hailed the work done by UTS researchers for showing what an Australian model law could look like and said it should start debate.

“Tech companies are looking for greater certainty and guidance on how they can safely, responsibly and ethically develop and use facial recognition technology for the benefit of Australian consumers and the economy,” chief executive Kate Pounder said.

Tech Council of Australia chief executive Kate Pounder, pictured at a function with some of her billionaire members, gave the report a warm reception. Credit: Jamila Toderas

She said the council particularly supported national regulation because many facial recognition systems will be rolled out across states and territories. Microsoft has previously said it favours regulation of the area.

NSW Police state intelligence commander Scott Cook praised the UTS proposal as a welcome contribution to the public debate on the use of facial recognition technology. “Facial recognition technologies have been used by NSW Police for more than ten years as a supplement to human decision-making about a person’s identity,” Cook said.

He argued the technology protects the community by helping police identify and prosecute criminals faster, delivering justice to victims. Its use needed to comply with privacy, anti-discrimination and other laws, Cook said.
